Session 4

Introduction

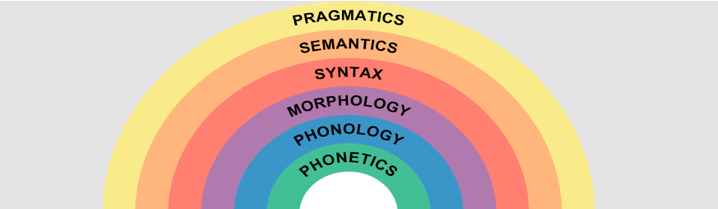

In this session, we will start discussing the implications that LLMs have for various adjacent fields. As their core task, language models are trained to produce human-like language; it is therefore natural to look at LLMs from the perspective of linguistics, the study of human language.

On the one hand, linguistic theory about how humans use language and how human language is structured has informed investigations into the linguistic capabilities that trained language models represent. On the other hand, the seemingly human-like performance of the models has led the field to discuss the extent to which LLMs are human-like in the mechanisms they employ to learn and use language in text form.

Both linguistically informed perspectives on LLMs are active research areas and subject to ongoing debate. The goal of this class is therefore to review some classical tools used to investigate LLMs’ linguistic capabilities and to engage actively with a snapshot of current discussions in the field. The learning goals for this session are:

- dive into “BERTology” to learn how LLMs represent “knowledge of language” and how to “unblackbox” them

- get acquainted with hands-on assessment of models’ linguistic knowledge

- develop opinions about whether LLMs are “human-like” with respect to language production, processing & learning.

Most importantly, this session is intended to encourage you to think critically about the methods used for investigating LLMs and the theoretical claims made about them in relation to various fields.

Slides

Prepared reading materials for this session's group assignment can be found on Moodle in the directory “Session 4”. The original papers from which the readings are extracted, as well as the opinion piece by Chomsky, Roberts & Watumull (2023), which is discussed in both papers, can be found in the general “Reading materials” directory.

Code

Python script from the lecture for getting surprisals from GPT-3.

Grammaticality test dataset discussed in the lecture for testing the models.
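
For orientation, the idea behind both resources can be sketched without calling any model. The surprisal of a token is its negative log probability, and a grammaticality test typically checks whether a model assigns lower total surprisal to the grammatical member of a minimal pair. The snippet below is a minimal sketch and not the lecture script: the function names are hypothetical, the log probabilities are made-up placeholder values rather than real model output, and it assumes the model returns natural-log probabilities per token.

```python
import math


def surprisals_in_bits(token_logprobs):
    """Convert natural-log token probabilities to surprisals in bits.

    Surprisal of a token is -log2 p(token | context); dividing a natural
    log by ln(2) converts it to base 2.
    """
    return [-lp / math.log(2) for lp in token_logprobs]


def sentence_surprisal(token_logprobs):
    """Total surprisal of a sentence: the sum over its tokens."""
    return sum(surprisals_in_bits(token_logprobs))


def prefers_grammatical(good_logprobs, bad_logprobs):
    """True if the grammatical sentence has lower total surprisal."""
    return sentence_surprisal(good_logprobs) < sentence_surprisal(bad_logprobs)


# Placeholder per-token log probabilities for a minimal pair.
# These numbers are invented for illustration only; real values would
# come from a language model's output.
grammatical = [-2.1, -0.7, -1.3, -0.4]
ungrammatical = [-2.1, -0.7, -4.9, -0.6]

print(prefers_grammatical(grammatical, ungrammatical))
```

Summing over tokens is only one possible scoring choice; when the two sentences of a pair differ in length, the comparison may instead be restricted to the critical region or normalized per token.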